
2 June 2021

- Airflow

Apache has released a new version of Airflow. Everyone who uses this tool knows that even minor changes can transform how DAGs work or block them entirely. Apache Airflow version 2.0 is not yet available on cloud platforms, but data pipelines are our domain, so we reviewed what’s new. Here we describe our first impressions and the changes you should be prepared for in this major version.

Airflow 2.0 has arrived – the biggest differences between Airflow 1.10.x and 2.0

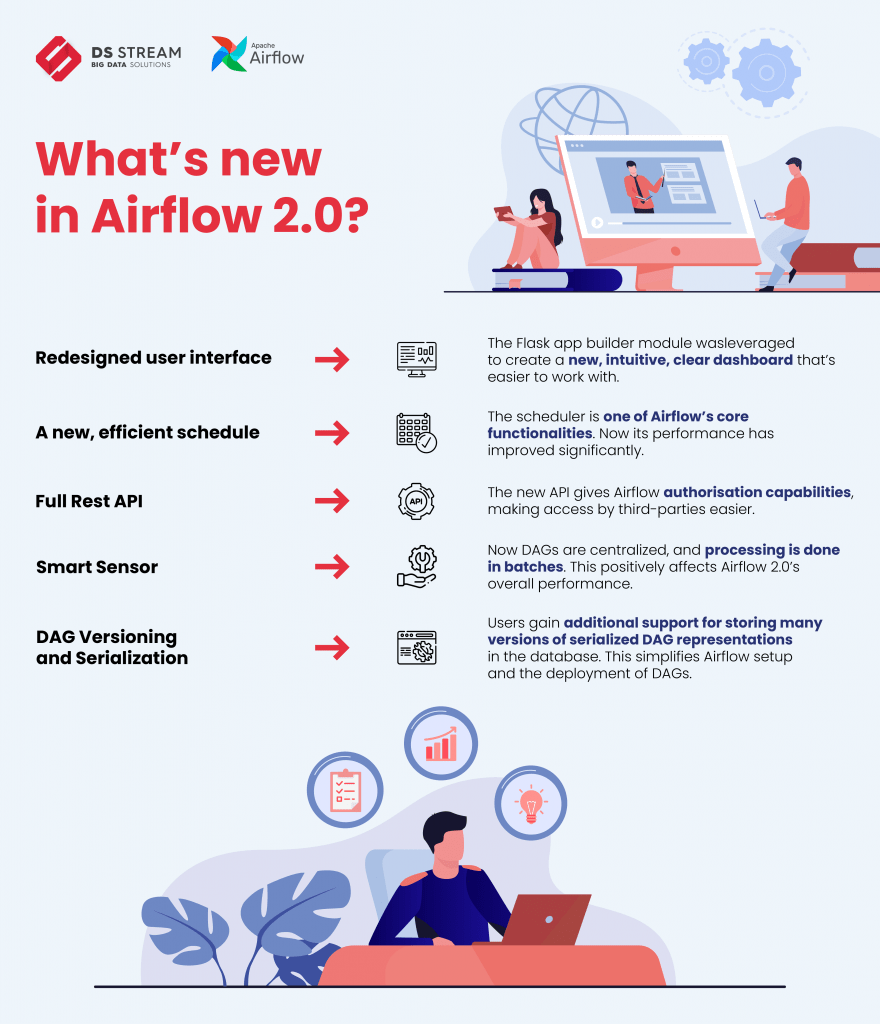

New User interface

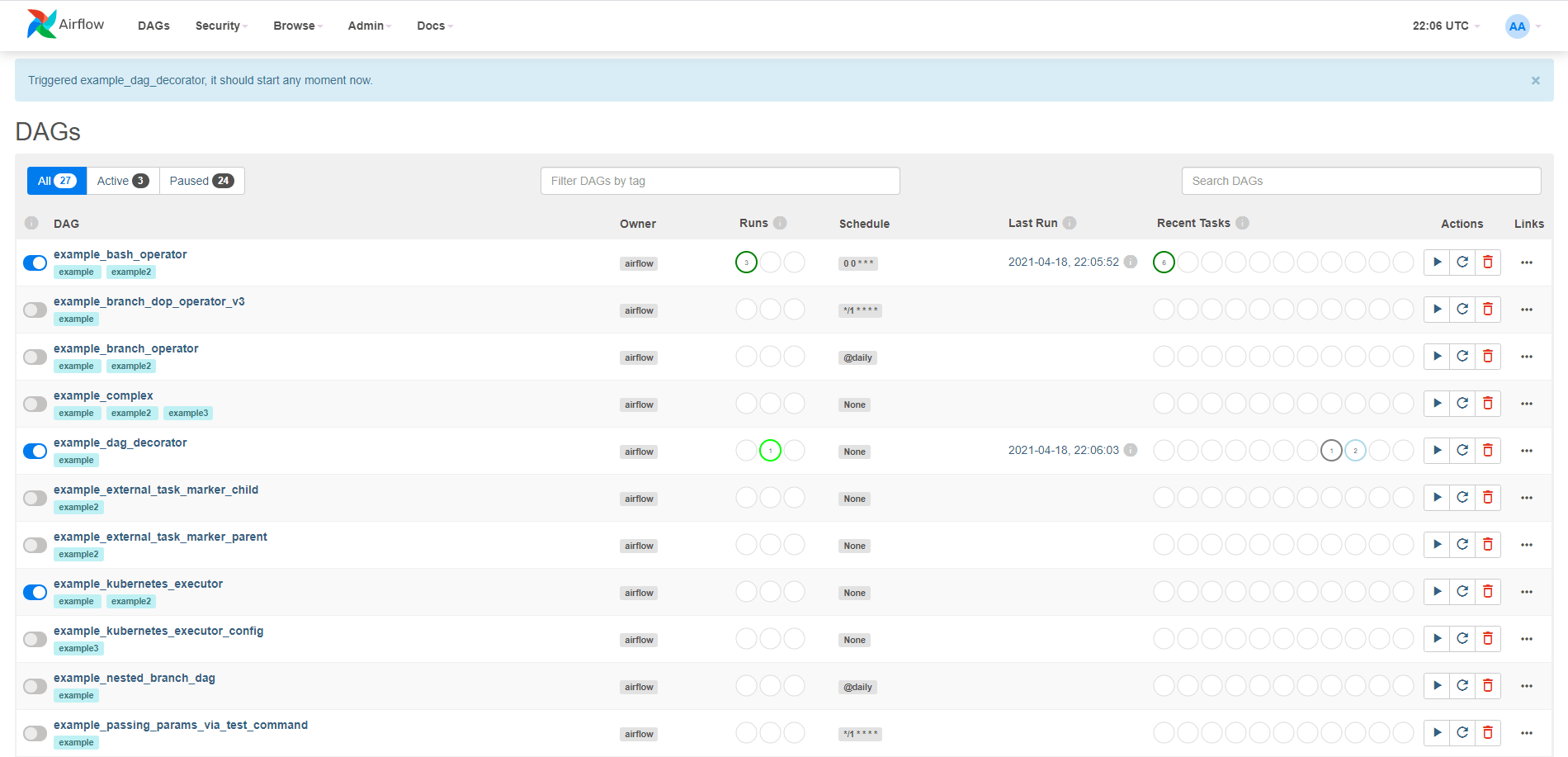

Airflow 2.0 has a completely new look based on the Flask AppBuilder module. With the new dashboard it is easier to find the information you need and navigate your DAGs. This version adds filters that make it easier to search for specific DAGs, and it displays DAG tags.

The DAG Run screen also has a new layout with extra information such as “Run type”, “External Trigger” and the applied configuration.

On the task screen, you’ll find a field with a documentation section, which can be very helpful in knowledge transfer from the development phase to the support phase. A very useful “Auto-refresh” switch has also appeared on the DAG screen. If you’re monitoring the execution of your code in the graph view, you can enable it and focus on other work.

If you create a very complex DAG with many tasks, you can aggregate your tasks into logical groupings. This solution can help you identify at which stage your process is stuck. Think how hard it would be to determine in which step the ETL process has failed if your DAG has hundreds of tasks. Now you can group them into sections. You can also nest sections within a section to more easily find a problematic logic group.

Sometimes even a team of developers using coding standards has difficulty finding where connections are being used. The new version of Airflow, apart from adding new types of connections, has also got a description field which makes it easier to identify what a connection is used for.

Another interesting part is the “Plugins” page available in the “Admin” menu. This page provides information about your installed plugins. To be honest, it is not a full plugin management engine, but it does offer information about installed extensions and helps developers identify conflicts if they don’t have admin access to the system.

Airflow is used more and more often as a component of larger systems. If you are considering integrating your system with Airflow, you will now find good API documentation. You use Swagger? Here you are. You don’t know Swagger, but you know Redoc? No problem. The new version of Airflow provides well-documented APIs in both formats. This allows you to work with Airflow without going through the user interface.

A redesigned scheduler

You might have experienced multiple DAG execution problems in previous releases due to scheduler bugs, for example:

- delays in task pick up (lag when switching from one task to the next task),

- problems with retries or distribution to workers.

The scheduler is a core component of Apache Airflow. In Airflow 2.0, the focus was on improving key elements to reduce delays and on making it possible to run several schedulers at the same time, scaling horizontally without missing tasks while scheduler replication is in progress. The new version also optimizes resource usage: the scheduler works faster without using more CPU or memory. Version 2.0 supports a high-availability setup, so if a system runs more than one scheduler, we expect zero downtime. This is possible because each scheduler works independently.

This solution improves performance when many DAGs run in parallel; in some tests performance increased tenfold.

REST API

Airflow can now be a full management tool. With the REST API, you can list all DAGs, trigger them and manage task instances. This is only a small part of what the API can do for you. It offers methods to add new connections as well as list them.

The API provides information about existing connections so you can use this in other systems. It is possible to read variables stored in Airflow or XCom results, so you can track the results of a task while a DAG is being processed. Of course, you can also easily control DAG execution, including triggering a DAG run with a specified configuration, or view a simple DAG representation.
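As a rough illustration of the calls described above, the sketch below builds two requests against the stable REST API using only the Python standard library: one that lists DAGs and one that triggers a DAG run with a configuration. The base URL, the `admin`/`admin` credentials and the `conf` payload are assumptions made for the example, and it presumes that basic authentication has been enabled for the API on your deployment.

```python
import base64
import json
import urllib.request

# Assumption: a local web server exposing the stable API under /api/v1.
BASE_URL = "http://localhost:8080/api/v1"


def build_request(path, username, password, payload=None):
    """Build a GET request, or a POST request when a JSON payload is given."""
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    headers = {"Authorization": f"Basic {token}", "Accept": "application/json"}
    data = None
    if payload is not None:
        data = json.dumps(payload).encode()
        headers["Content-Type"] = "application/json"
    method = "POST" if payload is not None else "GET"
    return urllib.request.Request(
        f"{BASE_URL}{path}", data=data, headers=headers, method=method
    )


def send(request):
    """Send a prepared request and decode the JSON response."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode())


# List all DAGs known to Airflow.
list_dags = build_request("/dags", "admin", "admin")

# Trigger a run of a DAG with a run configuration (hypothetical values).
trigger_run = build_request(
    "/dags/tutorial_taskflow_api_etl/dagRuns",
    "admin",
    "admin",
    payload={"conf": {"source": "api"}},
)
```

Nothing is sent until `send(list_dags)` or `send(trigger_run)` is called against a running web server, which keeps request construction separate from network access.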

Smart Sensor

Airflow 2.0 offers an extended sensor mechanism named SmartSensor. SmartSensor is able to check the status of tasks in batch mode and stores information about each sensor in the database. This improves performance and addresses the earlier problem of heavy sensors.

DAG Versioning

The previous version of Airflow allowed you to add new tasks to an existing DAG, but it had some undesirable effects such as orphaned tasks (tasks without status) in DagRun which meant that you could find tasks added in a recent version of a DAG in the previous execution. This is why you could have some problems checking logs or viewing code assigned to the current DagRun. In version 2.0, there is additional support for storing many versions of serialized DAGs, correctly showing the relations between DagRuns and DAGs.

DAG Serialization

The new version changes how the system parses DAGs. Previously, both the web server and the scheduler needed access to the DAG files. In the new version of Airflow, only the scheduler needs access to the DAG files: it parses them and stores a serialized form in the metadata database, and the web server only needs access to that database. From this change we get:

- High availability for the scheduler

- DAG versioning

- Faster DAG deployment

- Lazy DAG loading

- A stateless web server

A new way to define DAGs

The new version provides a new way to define DAGs with the TaskFlow API. Now you can use Python decorators to define your DAG.

```python
from airflow.decorators import dag, task
from airflow.utils.dates import days_ago


@dag(default_args={'owner': 'airflow'}, schedule_interval=None, start_date=days_ago(2))
def tutorial_taskflow_api_etl():
    @task
    def extract():
        # Stand-in for reading order data from a source system.
        return {"1001": 301.27, "1002": 433.21, "1003": 502.22}

    # multiple_outputs=True stores each key of the returned dict as its own XCom,
    # which is what allows order_summary["total_order_value"] below.
    @task(multiple_outputs=True)
    def transform(order_data_dict: dict) -> dict:
        total_order_value = 0
        for value in order_data_dict.values():
            total_order_value += value
        return {"total_order_value": total_order_value}

    @task()
    def load(total_order_value: float):
        print("Total order value is: %.2f" % total_order_value)

    # Calling the decorated functions wires up the task dependencies.
    order_data = extract()
    order_summary = transform(order_data)
    load(order_summary["total_order_value"])


tutorial_etl_dag = tutorial_taskflow_api_etl()
```

As the example above shows, you can write simple DAGs faster in pure Python with clear dependency handling. XCom is also easier to use, because return values are passed between tasks automatically. Additionally, the new version offers task decorators and supports a custom XCom backend.

What else?

Airflow 2.0 is no longer a monolith. It has been split into a core and 61 provider packages. Each of these packages targets a specific external service, a particular database (MySQL or Postgres) or a protocol (HTTP/FTP). This lets you perform a custom Airflow installation and build a tool that fits your individual requirements.

Additionally, you gain:

- Extended support for the Kubernetes Executor

- Plugin manager

- KEDA Queues
