
1 April 2021

- Data Engineering

We are all surrounded by data produced by IoT devices, browsers, applications and all the other things we use nowadays. How is all of this connected by pipes? Let's begin our journey through digital hydraulics 😊

What is a data pipeline?

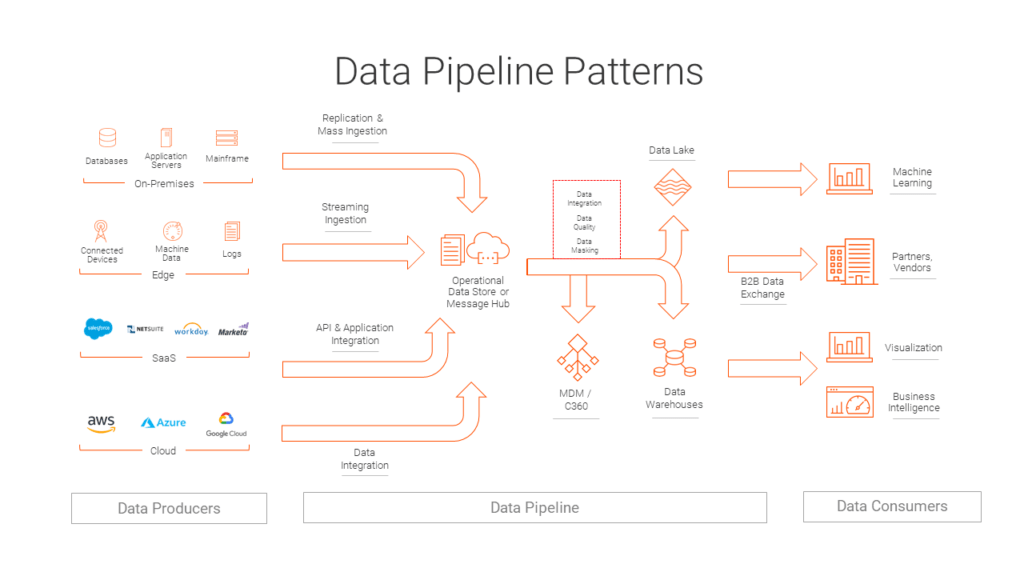

A data pipeline is a series of data ingestion and processing steps that represent the flow of data from one or more selected sources to a target. The target can be a data platform or the input to another pipeline, where the next processing steps begin. Generally, whenever we want to move and process data between points A and B, there is some kind of data pipeline behind the scenes. A pipeline may also span multiple points, each of which can be a separate system.

https://blogs.bmc.com/wp-content/uploads/2020/06/data-pipeline.jpg
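To make the definition concrete, here is a minimal Python sketch in which a pipeline is just an ordered chain of steps, and the output of one pipeline becomes the input of the next. The names `make_pipeline`, `ingest` and `analyse` and the sample rows are hypothetical, chosen only for illustration.

```python
from typing import Callable, List

Step = Callable[[List[str]], List[str]]


def make_pipeline(*steps: Step) -> Step:
    """Chain steps so that each one receives the previous step's output."""
    def run(data: List[str]) -> List[str]:
        for step in steps:
            data = step(data)
        return data
    return run


def strip_whitespace(rows: List[str]) -> List[str]:
    return [row.strip() for row in rows]


def drop_empty(rows: List[str]) -> List[str]:
    return [row for row in rows if row]


def normalise_case(rows: List[str]) -> List[str]:
    return [row.upper() for row in rows]


def distinct_sorted(rows: List[str]) -> List[str]:
    return sorted(set(rows))


# Hypothetical pipelines: ingestion cleans raw input, analysis consumes its output.
ingest = make_pipeline(strip_whitespace, drop_empty)
analyse = make_pipeline(normalise_case, distinct_sorted)

result = analyse(ingest([" pl ", "de", "", "pl"]))
print(result)  # ['DE', 'PL']
```

Because `ingest` and `analyse` share the same shape, either one can serve as the target of the other, mirroring the idea that a pipeline's output can start the next pipeline.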

Data Pipeline design principles

We can distinguish the following key pipeline types:

- Data science pipeline

- Data ingestion pipeline

- Data processing pipeline

- Data analysis pipeline

- Data streaming pipeline

In addition to these, there are many more, including combinations of several types. However, they should all follow a similar set of rules that need to be applied for proper data processing:

Reproducibility

Whether the pipeline is batch or streaming, it should be possible to restart it from a selected point in time and load the data again. This may be needed for many reasons, such as missing data, bugs and a number of other issues.
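One common way to make restarts safe is to organise data into time partitions and have each reload overwrite whole partitions instead of appending to them. The sketch below assumes daily partitions and uses in-memory dictionaries as stand-ins for real source and target storage; `backfill` and `double_amount` are hypothetical names.

```python
from datetime import date, timedelta
from typing import Callable, Dict, Iterator, List

Row = Dict[str, float]


def daily_partitions(start: date, end: date) -> Iterator[date]:
    """Yield every day from start to end, inclusive."""
    day = start
    while day <= end:
        yield day
        day += timedelta(days=1)


def backfill(
    source: Dict[date, List[Row]],
    target: Dict[date, List[Row]],
    start: date,
    end: date,
    transform: Callable[[Row], Row],
) -> List[date]:
    """Reload the target from a chosen point in time.

    Each daily partition is overwritten rather than appended to, so running
    the same backfill twice leaves the target in the same state (idempotent).
    """
    reloaded = []
    for day in daily_partitions(start, end):
        rows = source.get(day, [])
        target[day] = [transform(row) for row in rows]  # overwrite, never append
        reloaded.append(day)
    return reloaded


def double_amount(row: Row) -> Row:
    # Hypothetical transformation applied during the reload.
    return {"amount": row["amount"] * 2}


source = {
    date(2021, 3, 30): [{"amount": 10.0}],
    date(2021, 3, 31): [{"amount": 20.0}],
    date(2021, 4, 1): [{"amount": 30.0}],
}
target: Dict[date, List[Row]] = {}

# Restart from 31 March; the second call simulates a re-run after a bug fix.
backfill(source, target, date(2021, 3, 31), date(2021, 4, 1), double_amount)
backfill(source, target, date(2021, 3, 31), date(2021, 4, 1), double_amount)
print(target)
```

Running the backfill twice produces the same target, which is what makes restarting from an arbitrary point in time safe.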

Adaptability

There is no such thing as enough data. There will always be a need to handle more and more of it, so data pipelines need to rely on a scalable architecture.
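A typical building block of a scalable design is partitioning: records are split by a key so that each slice can be processed independently and in parallel. Distributed frameworks such as Spark apply the same partition-then-process idea across a cluster. The sketch below is a single-machine illustration under stated assumptions: threads stand in for workers, and the `customer`/`amount` fields are hypothetical.

```python
import zlib
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def partition_by_key(records: List[dict], key: str, num_partitions: int) -> List[List[dict]]:
    """Route each record to a bucket using a stable hash of its key."""
    partitions: List[List[dict]] = [[] for _ in range(num_partitions)]
    for record in records:
        bucket = zlib.crc32(str(record[key]).encode("utf-8")) % num_partitions
        partitions[bucket].append(record)
    return partitions


def total_per_customer(partition: List[dict]) -> Dict[str, float]:
    """Aggregate one partition without looking at any other partition."""
    totals: Dict[str, float] = defaultdict(float)
    for record in partition:
        totals[record["customer"]] += record["amount"]
    return dict(totals)


def process_in_parallel(records: List[dict], num_partitions: int = 4) -> Dict[str, float]:
    partitions = partition_by_key(records, "customer", num_partitions)
    result: Dict[str, float] = {}
    # Threads stand in for workers here; a real deployment would use processes or a cluster.
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        for partial in pool.map(total_per_customer, partitions):
            result.update(partial)  # safe: each customer lives in exactly one partition
    return result


records = [
    {"customer": "a", "amount": 10.0},
    {"customer": "b", "amount": 5.0},
    {"customer": "a", "amount": 2.5},
]
totals = process_in_parallel(records)
print(totals)
```

Handling more data then means adding partitions and workers rather than rewriting the processing logic.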

Reliability

Understanding business and technical requirements is key to properly designing the data transformation and transport steps. A proper data pipeline should be monitored at the source, at the target and within the pipeline itself, to assess the quality of the processed data.
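A simple form of this monitoring compares what left the source with what arrived at the target and validates the loaded rows. The sketch below shows two common checks, completeness (row counts) and validity (required fields are not null); the function name `check_quality` and the `id`/`email` fields are hypothetical.

```python
from typing import List


def check_quality(source_rows: List[dict], target_rows: List[dict], required_fields: List[str]) -> List[str]:
    """Return human-readable issues; an empty list means every check passed."""
    issues = []
    # Completeness: every source row should arrive at the target.
    if len(source_rows) != len(target_rows):
        issues.append(f"row count mismatch: source={len(source_rows)}, target={len(target_rows)}")
    # Validity: required fields must be present and non-null in the target.
    for i, row in enumerate(target_rows):
        for field in required_fields:
            if row.get(field) is None:
                issues.append(f"row {i}: missing value for '{field}'")
    return issues


source_rows = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": "b@example.com"},
    {"id": 3, "email": None},
]
target_rows = [
    {"id": 1, "email": "a@example.com"},
    {"id": 3, "email": None},
]

issues = check_quality(source_rows, target_rows, ["id", "email"])
for issue in issues:
    print(issue)
```

In a real pipeline, a non-empty result would typically raise an alert or stop downstream steps instead of only printing.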

Auditability

It should be easy to see when each component of the data pipeline runs or fails, so that corrective actions can be identified when needed. In general, data engineering teams should be able to identify specific steps, events, etc. quickly and easily.
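One lightweight way to get this visibility is to wrap every pipeline step so that each run leaves an audit record with its start time, end time, status and error. The sketch below assumes an in-memory list as the audit store; `audited`, `AUDIT_LOG` and the example steps are hypothetical names.

```python
import functools
from datetime import datetime, timezone
from typing import Any, Callable, Dict, List

# Hypothetical in-memory audit trail; in practice this could be a table or a log system.
AUDIT_LOG: List[Dict[str, Any]] = []


def audited(step_name: str) -> Callable:
    """Record start time, end time, status and error (if any) for every run of a step."""
    def decorator(func: Callable) -> Callable:
        @functools.wraps(func)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            entry = {"step": step_name, "started": datetime.now(timezone.utc), "error": None}
            try:
                result = func(*args, **kwargs)
                entry["status"] = "succeeded"
                return result
            except Exception as exc:
                entry["status"] = "failed"
                entry["error"] = repr(exc)
                raise  # let the failure propagate; the audit record is still written
            finally:
                entry["finished"] = datetime.now(timezone.utc)
                AUDIT_LOG.append(entry)
        return wrapper
    return decorator


@audited("extract")
def extract() -> List[int]:
    return [1, 2, 3]


@audited("transform")
def transform(rows: List[int]) -> List[float]:
    return [10 / row for row in rows]


rows = extract()
try:
    transform(rows + [0])  # division by zero simulates a failing step
except ZeroDivisionError:
    pass  # the failure has already been recorded in AUDIT_LOG

for entry in AUDIT_LOG:
    print(entry["step"], entry["status"], entry["error"])
```

Because the record is written in `finally`, failed runs are audited just like successful ones.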

Latency

Latency is the time it takes for data to travel from source to target.
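Latency can be measured per record as the difference between when the record was loaded into the target and when the event occurred at the source. The sketch assumes each record carries both timestamps, under the hypothetical field names `event_time` and `loaded_at`.

```python
from datetime import datetime
from statistics import median
from typing import List


def latency_seconds(records: List[dict]) -> List[float]:
    """Per-record latency: load time at the target minus event time at the source."""
    return [(r["loaded_at"] - r["event_time"]).total_seconds() for r in records]


records = [
    {"event_time": datetime(2021, 4, 1, 12, 0, 0), "loaded_at": datetime(2021, 4, 1, 12, 0, 4)},
    {"event_time": datetime(2021, 4, 1, 12, 0, 1), "loaded_at": datetime(2021, 4, 1, 12, 0, 3)},
    {"event_time": datetime(2021, 4, 1, 12, 0, 2), "loaded_at": datetime(2021, 4, 1, 12, 0, 11)},
]

latencies = latency_seconds(records)  # [4.0, 2.0, 9.0]
print(f"median={median(latencies)}s, max={max(latencies)}s")  # median=4.0s, max=9.0s
```

Tracking both a typical value (median) and the worst case (maximum) helps distinguish a generally slow pipeline from occasional delays.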

Security

Security requirements depend on business needs and on security standards around the world, and privacy is especially important. The rules depend on the data being processed and need to be applied at different levels for specific countries, industries and types of data.
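As one example of applying such rules inside a pipeline, personal fields can be pseudonymised before they reach the target. The sketch below uses a keyed hash (HMAC-SHA256) from the Python standard library: the same input always maps to the same token, so joins and counts still work, but the original value is not stored. This is only one possible technique. Which fields count as sensitive (`SENSITIVE_FIELDS`) and the hard-coded key are assumptions for illustration; a real key would come from a secrets manager.

```python
import hashlib
import hmac
from typing import Dict, Optional

# Assumption: which fields are personal data depends on your regulations and industry.
SENSITIVE_FIELDS = {"email", "phone"}


def pseudonymize(record: Dict[str, Optional[object]], secret_key: bytes) -> Dict[str, Optional[object]]:
    """Replace sensitive values with an HMAC-SHA256 token; leave other fields untouched."""
    masked: Dict[str, Optional[object]] = {}
    for field, value in record.items():
        if field in SENSITIVE_FIELDS and value is not None:
            digest = hmac.new(secret_key, str(value).encode("utf-8"), hashlib.sha256)
            masked[field] = digest.hexdigest()
        else:
            masked[field] = value
    return masked


KEY = b"replace-with-a-secret-from-a-vault"  # hypothetical; never hard-code real keys

a = pseudonymize({"id": 1, "email": "jan@example.com", "country": "PL"}, KEY)
b = pseudonymize({"id": 2, "email": "jan@example.com", "country": "PL"}, KEY)
print(a["email"] == b["email"])  # True: same person, same token
```

Using a keyed hash rather than a plain hash means that someone without the key cannot recompute tokens from guessed e-mail addresses.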

Developing data pipelines

Before the actual implementation, we need to consider how we want to proceed with our data. Do we expect batch loads, streaming loads, or both? What quantity and what types of data will be processed? Do we need a lot of transformations built into the process, or are we focusing on delivering raw data to the target?

All these questions should be answered upfront, and any doubts related to them should be properly discussed and assessed against our business needs and the technology we want to use. You can also visit our Data Pipeline Services to find out how our knowledge can benefit your business.

https://blogs.informatica.com/wp-content/uploads/2019/08/Data-Processing-Pipelines_Image-1024×576.png

Once our business needs are clear, there are different ways to set up a proper data pipeline and multiple tools we can use. Let's focus on the two most general approaches:

- Coding – The most demanding approach, requiring programming skills. It typically relies on dedicated languages and frameworks such as SQL, Spark, pandas or Kafka, and gives you full control over each step of your data pipeline, such as specific transformations or monitoring.

- Design tools – Based on products such as Talend, Informatica or Google Dataflow, which let you build a pipeline from prebuilt components through an easy-to-use interface.
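To illustrate the coding approach, here is a minimal extract-transform-load sketch that uses only the Python standard library: the `csv` module for extraction, plain Python for the transformation and SQLite (via `sqlite3`) as a stand-in target queried with SQL. The sample data, table name and function names are hypothetical; a production pipeline would typically use frameworks such as those listed above.

```python
import csv
import io
import sqlite3
from typing import List, Tuple

# Hypothetical raw input; order 3 has no amount and should be filtered out.
RAW_CSV = """order_id,country,amount
1,PL,120.50
2,DE,80.00
3,PL,
4,PL,19.50
"""


def extract(text: str) -> List[dict]:
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: List[dict]) -> List[Tuple[int, str, float]]:
    """Drop rows without an amount and cast every column to an explicit type."""
    return [
        (int(r["order_id"]), r["country"], float(r["amount"]))
        for r in rows
        if r["amount"]
    ]


def load(rows: List[Tuple[int, str, float]], conn: sqlite3.Connection) -> None:
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (order_id INTEGER PRIMARY KEY, country TEXT, amount REAL)"
    )
    # INSERT OR REPLACE keyed on order_id keeps repeated loads from duplicating rows.
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
totals = conn.execute(
    "SELECT country, SUM(amount) FROM orders GROUP BY country ORDER BY country"
).fetchall()
print(totals)  # [('DE', 80.0), ('PL', 140.0)]
```

Every step is ordinary code, which is where the full control comes from: validation, logging or monitoring can be added at any point between `extract`, `transform` and `load`.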

Whichever approach you choose, you will also need the infrastructure that enables your processing. Components such as storage, orchestration and analytics tools depend on the technology and your needs, but they should be considered indispensable elements of a modern data pipeline.
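At its core, orchestration means running pipeline tasks in dependency order. Orchestrators such as Apache Airflow model a pipeline as a directed acyclic graph (DAG) of tasks. The sketch below shows only that core idea with the standard-library `graphlib` module (Python 3.9+); it does not use Airflow's API, and the task names and `run_pipeline` helper are hypothetical. Real orchestrators add scheduling, retries and monitoring on top.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set


def run_pipeline(tasks: Dict[str, Callable[[], None]], dependencies: Dict[str, Set[str]]) -> List[str]:
    """Run every task after all of its upstream tasks have finished.

    Raises graphlib.CycleError if the dependencies contain a cycle.
    """
    graph = {name: dependencies.get(name, set()) for name in tasks}
    order = list(TopologicalSorter(graph).static_order())
    for name in order:
        tasks[name]()
    return order


executed: List[str] = []


def make_task(name: str) -> Callable[[], None]:
    # Hypothetical task body: a real task would extract, transform or load data.
    def task() -> None:
        executed.append(name)
    return task


task_names = ["extract_orders", "extract_customers", "join", "load", "refresh_report"]
tasks = {name: make_task(name) for name in task_names}
dependencies = {
    "join": {"extract_orders", "extract_customers"},
    "load": {"join"},
    "refresh_report": {"load"},
}

order = run_pipeline(tasks, dependencies)
print(order)
```

The two extraction tasks have no dependency on each other, so an orchestrator is free to run them in either order or in parallel; only `join` must wait for both.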

Conclusion

Data pipelines are at the heart of your system operations. They make it possible for data to be a valuable part of the business; therefore, the whole design and maintenance process should be well planned and executed.

DS Stream is a company with both infrastructure expertise and a strong analytical background. Our team includes specialists who can help you choose, set up, maintain and tailor solutions to your business needs and requirements.

Feel free to contact us at any time if you want to use your data more effectively in your business processes.
