11 February 2022

- Big Data

What do you know about the CAP Theorem? Distributed systems are all around us nowadays and provide businesses with more operational flexibility. But how do they really work, and what are the limitations of this architectural approach? Read our article to learn more.

New, more advanced solutions for business are always appearing on the market. They are more complex and allow users to share various types of information or files more efficiently. You have surely noticed that modern applications are usually web-based (we need network access to use them) and very often run globally. So, they need advanced solutions to provide low latency and data consistency. A great number of digital products now rely on distributed systems. Of course, no distributed system is totally flawless. With this kind of solution we have to face different challenges. Allow us to explain the CAP Theorem to you.

Traditional system – single box

The CAP Theorem applies to distributed systems, but first let’s explain how this architectural approach differs from the traditional one. In a centralized system, there is one central server (the master) to which one or more client nodes (slaves) are connected. It uses a client-server architecture: clients send requests to the main server, and the server responds. The main benefit of this approach is that it is easy to set up, secure, update and maintain, because nodes are added or removed simply by connecting them to or disconnecting them from the master. The biggest risk is the single point of failure: any problem that affects the main server may bring the whole system down. Scaling is also limited, because adding resources to the one server (vertical scaling) is the only option, and beyond a certain point a bigger box is simply not available. Some loads are too much for a single machine to handle.

Architecture of traditional centralized systems

When it comes to storing data, a traditional system is the easiest place to achieve the ACID principles (Atomicity, Consistency, Isolation, Durability) on which relational databases are built. A sequence of operations that satisfies these principles is called a transaction, and it is treated as a single unit. Atomicity in particular means “all or nothing”: the transaction either succeeds or fails completely, with nothing in between. Having all the data in one place (on the master server) makes it much easier to keep the database in a consistent state. A centralized system therefore lives in a world of consistency by default.
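To make the “all or nothing” idea concrete, here is a minimal sketch using Python's built-in `sqlite3` module as a stand-in for a single-server relational database. The `accounts` table, the `transfer` helper and the sample balances are our own illustrative assumptions, not part of any particular product.

```python
import sqlite3

def transfer(conn, src, dst, amount):
    """Move `amount` from `src` to `dst` as a single transaction."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE name = ?",
                (amount, dst),
            )
            # If this debit violates the CHECK constraint, the credit above
            # is rolled back as well: nothing is left half-done.
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE name = ?",
                (amount, src),
            )
        return True
    except sqlite3.IntegrityError:
        return False

def balances(conn):
    return dict(conn.execute("SELECT name, balance FROM accounts"))

# Hypothetical sample data: balances may never drop below zero.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts ("
    "name TEXT PRIMARY KEY, balance INTEGER CHECK (balance >= 0))"
)
conn.execute("INSERT INTO accounts VALUES ('alice', 100), ('bob', 50)")
conn.commit()

ok = transfer(conn, "alice", "bob", 30)       # fits within alice's balance
failed = transfer(conn, "alice", "bob", 500)  # would overdraw alice
print(ok, failed, balances(conn))
```

The second transfer credits bob before the debit fails, yet after the rollback neither account has changed. That is the guarantee a single database server gives almost for free.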

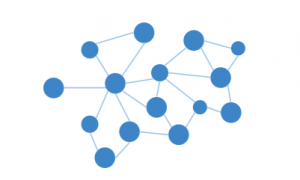

A distributed system – there is no boss

With the rapid development of the Internet, business requirements changed drastically, and relational databases on a single server were often no longer sufficient for the storage needs of complex systems. Data consistency is no longer the only concern: availability, performance, and scale are now equally important. Engineers had to come up with clever solutions, among which distributed systems have gained great popularity (the CAP Theorem refers specifically to distributed data stores). They are based on the idea that there is no longer one boss who delegates responsibilities to a team; instead, every member has the power to make decisions. Technically, a distributed system is a collection of independent nodes, often placed in different locations, that are connected to each other and share information. It works as a single logical data network and appears to the end user as if it were one computer, while behind the scenes the state of the system is replicated across multiple nodes.

Architecture of distributed systems

Some of the biggest benefits of a distributed system are:

- Scalability – it is possible to take advantage of both vertical and horizontal scaling, by adding compute resources to each node or by joining additional nodes to the network (the latter is almost unlimited)

- Fault tolerance – if one or more nodes go down, the system as a whole stays up and running, as long as enough nodes remain available to serve the users

- Performance – the components of the system share resources and run concurrently, which makes it possible to balance the workload across all the nodes

Things get more complicated when it comes to storing data. With a distributed system, it is quite difficult to achieve consensus. When writing new records to the database, one has to decide when to consider it complete – when the data persists on one node, or only after it has been replicated everywhere. This brings us to a world where consistency is a choice.
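The choice of when a write counts as complete can be sketched with a toy model. Everything here (the `Replica` class, the `write` function and the `required_acks` parameter) is a hypothetical illustration and not the API of any real database.

```python
from dataclasses import dataclass, field

@dataclass
class Replica:
    name: str
    reachable: bool = True
    data: dict = field(default_factory=dict)

def write(replicas, key, value, required_acks):
    """Send the write to every reachable replica and report whether enough
    of them acknowledged it for the write to count as complete."""
    acks = 0
    for replica in replicas:
        if replica.reachable:
            replica.data[key] = value
            acks += 1
    # Simplification: a write that falls short of `required_acks` is reported
    # as failed but is not undone on the replicas that already stored it.
    return acks >= required_acks

# Three replicas, one of which is currently unreachable.
nodes = [Replica("a"), Replica("b"), Replica("c", reachable=False)]

fast = write(nodes, "user:1", "Ann", required_acks=1)             # one copy is enough
strict = write(nodes, "user:1", "Ann", required_acks=len(nodes))  # all copies required
print(fast, strict)
```

With one node down, the relaxed policy reports success while the strict one reports failure. The same outage is invisible under one setting and an error under the other.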

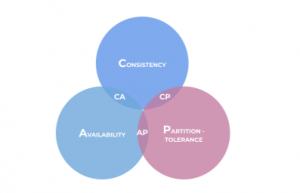

The CAP Theorem in Big Data

The CAP Theorem was first presented by Dr. Eric Brewer in 2000 as a conjecture and was formally proven in 2002 by Seth Gilbert and Nancy Lynch. It refers to distributed systems and states that, of consistency, availability, and partition tolerance, at most two can be guaranteed at the same time (in practice, all three are better thought of as ranges than as booleans).

Graphical representation of CAP Theorem

Let’s focus on partition tolerance first. Partition tolerance in the CAP Theorem means that a system keeps working even when a network failure cuts off communication between two or more nodes. Because the CAP Theorem concerns distributed systems, where such failures cannot be ruled out, partition tolerance is a must. So, when a partition occurs, we can guarantee either availability (an AP system) or consistency (a CP system), but not both. A system that guarantees both consistency and availability (CA) is only possible as long as the network never fails.

If we favor availability over consistency, every node continues to serve queries without error, but there is no guarantee that a response contains the most recent writes. If we favor consistency, every successful request returns the most recent data, but a node that loses contact with other replicas may return an error, and coordinating the replicas adds latency.
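The difference between the two behaviours can be sketched with a toy two-node store. This is a deliberately simplified model under our own assumptions: the `TwoNodeStore` class, its `mode` flag and the `Unavailable` exception are hypothetical names. A real CP system would typically keep serving requests on the side of the partition that holds a majority, rather than refusing everything.

```python
class Unavailable(Exception):
    """Raised when a node refuses a request to protect consistency."""

class TwoNodeStore:
    def __init__(self, mode):
        if mode not in ("AP", "CP"):
            raise ValueError("mode must be 'AP' or 'CP'")
        self.mode = mode
        self.partitioned = False
        self.values = [None, None]  # one copy of the value per node

    def write(self, node, value):
        if self.partitioned and self.mode == "CP":
            raise Unavailable("cannot reach the other replica")
        self.values[node] = value
        if not self.partitioned:
            self.values[1 - node] = value  # replicate to the peer

    def read(self, node):
        if self.partitioned and self.mode == "CP":
            raise Unavailable("cannot confirm the latest value")
        return self.values[node]

def run(mode):
    """Write v1, cut the network, write v2 on node 0, then read from node 1."""
    store = TwoNodeStore(mode)
    store.write(0, "v1")
    store.partitioned = True
    try:
        store.write(0, "v2")
        return store.read(1)
    except Unavailable as exc:
        return f"error: {exc}"

print("AP:", run("AP"))  # answers, but with the stale value v1
print("CP:", run("CP"))  # refuses the write instead of risking divergence
```

In AP mode the client always gets an answer, although node 1 never saw the second write. In CP mode the client gets an error, but no node ever returns outdated data.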

Remedies come from the sky – data management in the cloud

Can the trade-offs described by the CAP Theorem be worked around somehow? Cloud providers offer various modern data storage services that handle CAP trade-offs differently while keeping the benefits of highly scalable data stores. There is no single magic solution, as the dimensions to optimize vary a lot. We can choose from a set of services, each optimized for a particular workload. Let’s take a look at some examples.

Google Cloud – Cloud Spanner – consistency

Spanner is a globally distributed SQL database offered in Google Cloud. It is technically a CP system: during a network partition it chooses consistency over availability. It uses TrueTime (a feature that uses a distributed clock to assign timestamps to transactions), which allows users to do globally consistent reads across the entire database without blocking writes. However, Spanner also claims to achieve almost 100% availability – to be precise, better than ‘5 9s’ (99.999%). That is why Spanner can reasonably claim to be an ‘effectively CA’ system.

Amazon Web Services – Dynamo – availability

Dynamo is a highly available key-value storage system developed at Amazon and designed to provide an ‘always-on’ experience for various Amazon offerings. Amazon DynamoDB, the managed NoSQL database in Amazon Web Services, builds on Dynamo’s core principles to meet scaling and reliability requirements. Availability is achieved here by relaxing consistency (via the so-called ‘eventual consistency model’, in which all updates reach all replicas at some point in the future).
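To illustrate what “eventually” means, here is a minimal sketch in which replicas accept writes independently and converge later by exchanging state. For brevity it resolves conflicts with a simple last-write-wins rule based on a numeric timestamp. This is our simplification: Dynamo itself tracks versions with vector clocks, and the `merge` helper and the sample data are hypothetical.

```python
from itertools import combinations

def merge(a, b):
    """Exchange state so both replicas keep the newest version of each key.

    Each replica maps key -> (timestamp, value); the higher timestamp wins.
    """
    for key in set(a) | set(b):
        newest = max(a.get(key, (0, None)), b.get(key, (0, None)),
                     key=lambda version: version[0])
        a[key] = b[key] = newest

# Three replicas that received different writes while out of touch.
r1 = {"color": (1, "blue")}
r2 = {"color": (2, "green")}
r3 = {}

before = [r.get("color") for r in (r1, r2, r3)]

# One round in which every pair of replicas synchronises once.
for left, right in combinations((r1, r2, r3), 2):
    merge(left, right)

after = [r.get("color") for r in (r1, r2, r3)]
print(before)
print(after)
```

Before the exchange, a read may return blue, green, or nothing, depending on which replica answers. After it, all three agree on the newest write. The window in between is the price paid for staying available.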

Microsoft Azure – Cosmos DB – take your choice

Cosmos DB is a globally distributed NoSQL database offered in the Azure cloud by Microsoft. Users can choose one of five pre-defined consistency levels, each of which strikes a different balance between consistency, availability, and latency. The choices are: Strong (favors data consistency above everything else), Bounded staleness, Session, Consistent prefix, and Eventual (here availability is the winner). These options turn the dilemma from a ‘0-1’ type of choice into a wide spectrum of possibilities tailored to customers’ needs.
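The spectrum can be pictured with a toy model of a replica that lags behind the full write history. The sketch covers only three of the five levels and is not the Azure SDK or Cosmos DB's actual algorithm: the `read` function, its parameters and the notion of lag counted in writes are our own assumptions for illustration.

```python
def read(level, log, applied, max_lag=2):
    """Return the value a lagging replica would serve under `level`.

    log      -- every committed value, oldest first (the full history)
    applied  -- how many entries of `log` the replica has applied so far
    max_lag  -- for bounded staleness, the most writes a read may be behind
    """
    if level == "strong":
        applied = len(log)                          # must reflect every write
    elif level == "bounded_staleness":
        applied = max(applied, len(log) - max_lag)  # lag capped at max_lag writes
    elif level != "eventual":
        raise ValueError(f"unknown level: {level}")
    # Under "eventual" the replica serves whatever it has applied so far.
    return log[applied - 1] if applied else None

log = ["v1", "v2", "v3", "v4", "v5"]

# A replica that has applied only the first write so far.
results = {
    level: read(level, log, applied=1)
    for level in ("strong", "bounded_staleness", "eventual")
}
print(results)
```

The same lagging replica returns the newest value under the strong setting, a value at most two writes old under bounded staleness, and its own outdated copy under the eventual setting.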

If your company is looking for a modern data storage solution that gives all of your users a fast and responsive experience, don’t hesitate to contact us. We will help you face data management challenges and make the most of your system.

Bibliography:

- Brewer, Eric. Spanner, TrueTime and The CAP Theorem. 2017.

- DeCandia, Giuseppe; Hastorun, Deniz; Jampani, Madan; et al. Dynamo: Amazon’s Highly Available Key-Value Store. ACM SIGOPS Operating Systems Review, 41(6), 205-220. 2007.

- Dimitrovich, Slavik. Architecture II: Distributed Data Stores | Amazon Web Services. 2015: https://aws.amazon.com/blogs/startups/distributed-data-stores-for-mere-mortals/

- Microsoft. Build Modern Apps with Big Data at a Global Scale. 2017. https://www.arbelatech.com/insights-resources/white-papers/build-modern-apps-with-big-data-at-a-global-scale