
2 June 2021

- Data Streaming

Streaming data is characterized by a high volume of continuously generated data arriving at high velocity. In short, certain events generate data continuously, and these events form a data stream. Simple examples of such events are readings from an IoT sensor measuring pressure, or a person clicking a link on a website or in a mobile application. Streaming data is often unstructured or semi-structured, usually in the form of key-value pairs in JSON or XML.
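To make this concrete, here is a minimal Python sketch of such a stream: a generator that keeps emitting JSON key-value events for a pressure sensor. The device ID, field names, and random pressure values are illustrative assumptions; a real source would be a device or a web/mobile client rather than a generator.

```python
import itertools
import json
import random
import time
from typing import Iterator


def sensor_events(device_id: str, seed: int = 42) -> Iterator[str]:
    """Yield an endless stream of JSON-encoded pressure readings (hypothetical format)."""
    rng = random.Random(seed)
    for seq in itertools.count():
        event = {
            "device_id": device_id,
            "seq": seq,
            "ts": time.time(),
            "pressure_kpa": round(rng.uniform(95.0, 105.0), 2),
        }
        yield json.dumps(event)  # semi-structured key-value payload


# The stream never ends on its own; take the first three events for inspection.
for raw in itertools.islice(sensor_events("sensor-01"), 3):
    print(json.loads(raw))
```

Because the generator never terminates, consumers must process events one at a time as they arrive, which is exactly the situation the rest of this article deals with.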

Although streaming technologies are not new, they have matured rapidly in recent years. For those looking for clarity on how to handle data streaming, several questions need to be considered: What does Streaming Data Architecture mean? How does stream processing differ from real-time processing? How should an architecture be designed to handle data streaming? What components can a Streaming Data Architecture include? What are the benefits of applying it?


What is Streaming Data Architecture?

Streaming Data Architecture consists of software components built and connected together to ingest and process streaming data from various sources. It processes the data right after it is collected. Processing includes routing the data to its designated storage and may trigger further steps, such as analytics, additional data manipulation, or further real-time processing.

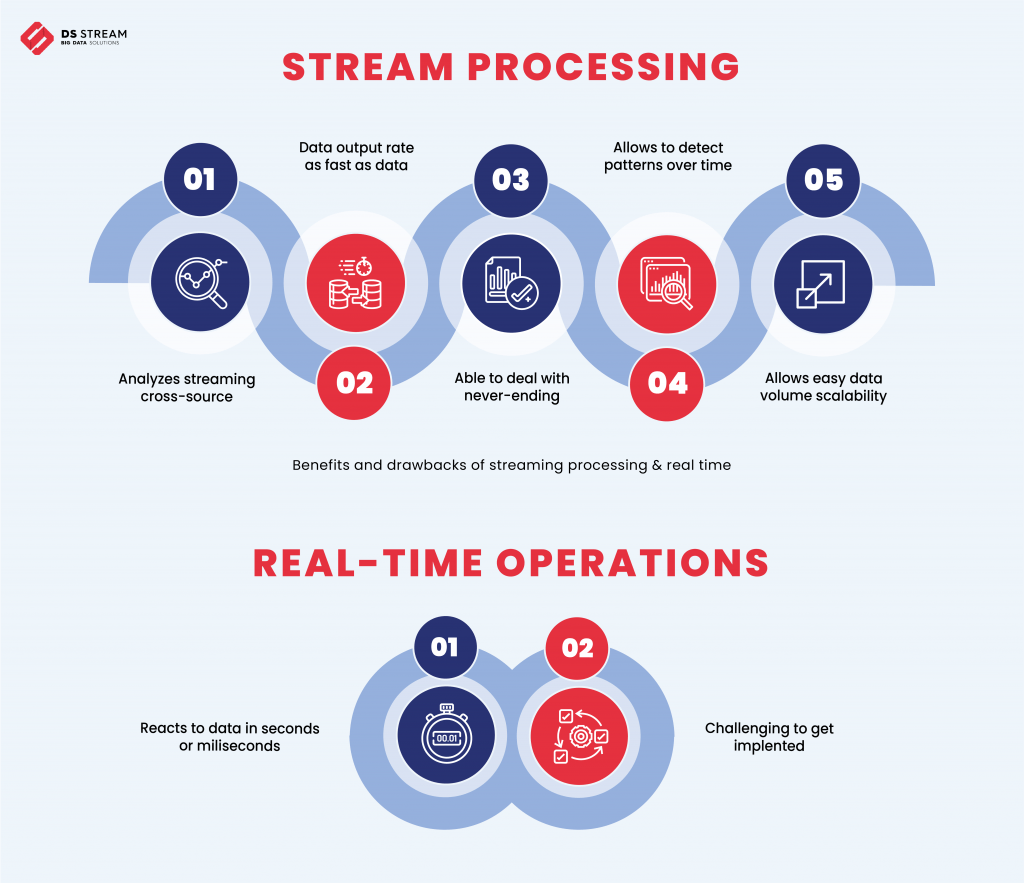

There are several approaches to data processing. The first, “old-fashioned” one is batch processing, in which a large volume of data is processed all at once. Here, however, we will focus on the difference between stream processing and real-time operations. The simplest explanation is that real-time operations are about reactions to the data, whereas stream processing is about actions taken on the data.
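The contrast between processing data all at once and processing it as it flows can be illustrated with a simple average. In the Python sketch below, the batch version needs the whole dataset before it can answer, while the streaming version keeps a small running state and updates its answer after every event. The function names are illustrative and not taken from any framework.

```python
from typing import Iterable, Iterator


def batch_mean(values: list[float]) -> float:
    """Batch processing: all data is available and processed at once."""
    return sum(values) / len(values)


def streaming_mean(values: Iterable[float]) -> Iterator[float]:
    """Stream processing: update a running mean as each value arrives."""
    count = 0
    mean = 0.0
    for value in values:
        count += 1
        mean += (value - mean) / count  # incremental update with O(1) state
        yield mean


readings = [10.0, 12.0, 14.0, 16.0]
print(batch_mean(readings))            # 13.0, one result after all data is in
print(list(streaming_mean(readings)))  # [10.0, 11.0, 12.0, 13.0], one result per event
```

The streaming version never stores the full history, which is what lets it run indefinitely over an unbounded stream.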

Real-time solutions guarantee that data is processed within a short time after it has been gathered. The reaction to the data is almost immediate: processing can take minutes, seconds, or even milliseconds, depending on business requirements and the solution applied. An example use case for real-time operations is buying and selling on the stock market, where a quote must be provided right after an order is placed.

In contrast, stream processing is about continuous computation that happens as data flows through a system. There is no hard time limit other than those imposed by the processing power of the components, the technology applied, and the business's tolerance for latency. In other words, there is no deadline for the system's output or reaction when data arrives; the data is simply processed as it comes. The success of this architecture depends on two things: over the long term, output rates must be at least equal to input rates, and the system must have enough memory to store the queued inputs required for the computation. Stream processing is beneficial when frequent events need to be tracked, or when events need to be detected right away and responded to quickly. Example use cases include cybersecurity and fraud detection.
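The first success condition, that output rates must keep up with input rates over the long term, can be checked with a toy queue model. The Python sketch below assumes fixed per-tick arrival and service rates (a simplification; real traffic is bursty) and tracks how many inputs are waiting in the queue, which is the amount that has to fit in memory.

```python
def backlog_over_time(arrival_rate: int, service_rate: int, ticks: int) -> list[int]:
    """Return the queue length after each tick for fixed arrival/service rates."""
    backlog = 0
    history = []
    for _ in range(ticks):
        backlog += arrival_rate                # new events arrive
        backlog -= min(backlog, service_rate)  # the processor drains what it can
        history.append(backlog)
    return history


# Processor keeps up: the queue never grows.
print(backlog_over_time(arrival_rate=100, service_rate=120, ticks=5))  # [0, 0, 0, 0, 0]
# Processor is too slow: the backlog, and the memory it needs, grows every tick.
print(backlog_over_time(arrival_rate=100, service_rate=80, ticks=5))   # [20, 40, 60, 80, 100]
```

When the service rate falls below the arrival rate, the backlog grows without bound, so no fixed amount of memory is enough; short bursts above the service rate are fine as long as the long-term average stays below it.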

Stream processing is usually a complex challenge. To leverage this technology for your benefit, you will need a solution consisting of multiple components. When designing the architecture, you also need to take the characteristics of data streams into account: they tend to generate a huge volume of data and require pre-processing, extraction, and transformation to become more useful.

What could be the components and the design of Streaming Data Architecture?

Regardless of the platform used, a streaming data architecture needs to include the following key component groups:

1. Message Brokers

This component group takes data from a source, transforms it into a standard message format, and streams it on an ongoing basis to make it available for use, which means that other tools can listen in and consume the messages passed on by the brokers. Popular message brokers include the open-source Apache Kafka and PaaS (Platform as a Service) components such as Azure Event Hubs, Azure IoT Hub, GCP Cloud Pub/Sub, or Confluent Cloud on GCP, a cloud-native event streaming platform powered by Apache Kafka. Microsoft Azure also supports Kafka as an HDInsight cluster type, so it can be used as PaaS there as well.
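The publish/subscribe pattern behind message brokers can be sketched in a few lines of Python. This is a toy, in-memory model loosely inspired by Kafka's idea of an append-only log per topic with consumer-tracked offsets. The class and method names are hypothetical; this is not the API of Kafka, Event Hubs, or Pub/Sub, and it offers none of their durability, partitioning, or replication.

```python
import json
from collections import defaultdict


class InMemoryBroker:
    """Toy broker (hypothetical): one append-only log per topic, offsets per consumer group."""

    def __init__(self) -> None:
        self._logs: dict[str, list[str]] = defaultdict(list)
        self._offsets: dict[tuple[str, str], int] = defaultdict(int)

    def publish(self, topic: str, payload: dict) -> int:
        """Serialize the payload into a standard (JSON) message and append it."""
        self._logs[topic].append(json.dumps(payload, sort_keys=True))
        return len(self._logs[topic]) - 1  # offset of the new message

    def poll(self, topic: str, group: str, max_messages: int = 10) -> list[dict]:
        """Return unread messages for a consumer group and advance its offset."""
        start = self._offsets[(topic, group)]
        batch = self._logs[topic][start:start + max_messages]
        self._offsets[(topic, group)] = start + len(batch)
        return [json.loads(m) for m in batch]


broker = InMemoryBroker()
broker.publish("clicks", {"user": "u1", "url": "/home"})
broker.publish("clicks", {"user": "u2", "url": "/pricing"})

# Two independent consumer groups each read the stream at their own pace.
print(broker.poll("clicks", group="analytics"))
print(broker.poll("clicks", group="archiver", max_messages=1))
```

Because each consumer group keeps its own offset, adding a new consumer does not affect existing ones, which is the property that lets other tools "listen in" on the same stream.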

In addition to the examples above, other tools are available, such as Solace PubSub+ or MuleSoft Anypoint. These are usually built on top of open-source components and provide a complete, multi-cloud integration environment that supports, among other things, streaming data and removes the overhead related to platform setup and maintenance.

2. Processing Tools

The output data streams from the message broker or stream processor described above need to be transformed and structured before they can be analyzed further with analytics tools. The result of such analysis can be actions, alerts, dynamic dashboards, or even new data streams.
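A processing step of this kind typically parses raw messages, skips or quarantines malformed ones, converts types, and emits derived outputs such as alerts. The Python sketch below assumes a JSON payload with hypothetical `device_id` and `pressure_kpa` fields and an arbitrary alert threshold; it shows the shape of the step, not any particular framework's API.

```python
import json
from dataclasses import dataclass
from typing import Iterable, Iterator

PRESSURE_ALERT_KPA = 104.0  # hypothetical alert threshold


@dataclass(frozen=True)
class Reading:
    device_id: str
    pressure_kpa: float


def parse(raw_messages: Iterable[str]) -> Iterator[Reading]:
    """Turn raw JSON strings into typed records, skipping malformed messages."""
    for raw in raw_messages:
        try:
            data = json.loads(raw)
            yield Reading(str(data["device_id"]), float(data["pressure_kpa"]))
        except (KeyError, TypeError, ValueError):  # JSONDecodeError is a ValueError
            continue  # a real pipeline might route these to a dead-letter queue


def alerts(readings: Iterable[Reading]) -> Iterator[dict]:
    """Derive a new stream of alert events from the structured readings."""
    for r in readings:
        if r.pressure_kpa > PRESSURE_ALERT_KPA:
            yield {"type": "high_pressure", "device_id": r.device_id, "value": r.pressure_kpa}


raw_stream = [
    '{"device_id": "s1", "pressure_kpa": 101.2}',
    '{"device_id": "s2", "pressure_kpa": "105.7"}',
    'not json',
    '{"device_id": "s3"}',
]
print(list(alerts(parse(raw_stream))))
# [{'type': 'high_pressure', 'device_id': 's2', 'value': 105.7}]
```

Chaining generators keeps each step lazy, so records flow through one at a time, and the alert output is itself a new data stream that could be published back to a broker.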

When it comes to open-source frameworks focused on processing streamed data, the most popular and widely known are Apache Storm, Apache Spark Streaming, and Apache Flink. Microsoft Azure supports deploying Apache Spark and Apache Storm as HDInsight cluster types. In addition, Azure provides its proprietary solution, Azure Stream Analytics, a pure PaaS component that acts as a real-time analytics and complex event-processing engine designed to analyze and process high volumes of fast streaming data from multiple sources simultaneously. It therefore sits partly in data analytics and partly in real-time processing.
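A core operation offered by engines such as Flink, Spark Streaming, and Stream Analytics is windowed aggregation, for example counting events per key in fixed, non-overlapping (tumbling) time windows. The plain-Python sketch below shows only the idea. It assumes events arrive as `(timestamp, key)` pairs and ignores late or out-of-order data, which the real engines handle with watermarks or similar late-arrival policies.

```python
from collections import Counter, defaultdict
from typing import Iterable


def tumbling_window_counts(
    events: Iterable[tuple[float, str]], window_seconds: float
) -> dict[float, Counter]:
    """Count events per key in fixed, non-overlapping windows keyed by window start."""
    windows: dict[float, Counter] = defaultdict(Counter)
    for ts, key in events:
        window_start = ts - (ts % window_seconds)  # align to the window boundary
        windows[window_start][key] += 1
    return dict(windows)


events = [(0.5, "login"), (3.2, "click"), (4.9, "click"), (5.0, "login"), (9.99, "click")]
for start, counts in sorted(tumbling_window_counts(events, window_seconds=5).items()):
    print(f"[{start}, {start + 5}): {dict(counts)}")
```

A production engine would also decide when a window is complete and emit its result incrementally, instead of returning all windows at the end as this sketch does.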

On GCP, the key platforms for streamed data processing are Dataproc, which includes Spark and Flink, and Dataflow, a separate proprietary solution.

3. Data Analytics Tools

Once streaming data has been prepared for consumption by the stream processor and processing tools, it needs to be analyzed to provide value. There are many different approaches to streaming data analytics; here we focus on the best-known ones.

Apache Cassandra is an open-source, distributed NoSQL database that provides low-latency serving of streaming events to applications. Kafka streams can be processed and persisted to a Cassandra cluster. It is also possible to implement another Kafka instance that receives a stream of changes from Cassandra and serves them to other applications for real-time decision making.
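The pattern of persisting a stream into a serving store and publishing the resulting changes to other applications can be modeled in memory. The Python sketch below is not Cassandra or its driver: it is a hypothetical key-value store that keeps the latest row per key for fast lookups and appends every real change to a change log, standing in for the change stream that a downstream consumer (such as the second Kafka instance mentioned above) would read.

```python
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class ServingStore:
    """Hypothetical store: latest row per key plus an ordered, CDC-style change log."""

    rows: dict[str, dict[str, Any]] = field(default_factory=dict)
    change_log: list[dict[str, Any]] = field(default_factory=list)

    def upsert(self, key: str, row: dict[str, Any]) -> None:
        """Write the row and record a change event only if the value actually changed."""
        before = self.rows.get(key)
        if before == row:
            return  # no-op writes produce no change events
        self.rows[key] = row
        self.change_log.append({"key": key, "before": before, "after": row})

    def get(self, key: str) -> Optional[dict[str, Any]]:
        """Point lookup by key, as a serving application would do."""
        return self.rows.get(key)


store = ServingStore()
for event in [
    {"device_id": "s1", "pressure_kpa": 101.0},
    {"device_id": "s1", "pressure_kpa": 101.0},  # duplicate, produces no change event
    {"device_id": "s1", "pressure_kpa": 103.5},
]:
    store.upsert(event["device_id"], event)

print(store.get("s1"))        # latest state served to applications
print(len(store.change_log))  # 2 change events for downstream consumers
```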

Another example is Elasticsearch, which can receive streamed data directly from Kafka topics. With the Avro data format and a schema registry, Elasticsearch mappings are created automatically, and fast text search or analytics can then be performed within Elasticsearch.
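The idea behind schema-driven mappings is that each field's type is already declared in the Avro schema, so an index mapping can be derived from it rather than written by hand. The Python sketch below illustrates this with a hypothetical, simplified type table. It is not the logic of any particular Kafka-to-Elasticsearch connector, and it handles only flat records with primitive or nullable primitive fields.

```python
import json
from typing import Any

# Hypothetical, simplified Avro-to-Elasticsearch type table (an assumption,
# not the rules used by any real connector).
AVRO_TO_ES = {
    "string": "keyword",
    "int": "integer",
    "long": "long",
    "float": "float",
    "double": "double",
    "boolean": "boolean",
    "bytes": "binary",
}


def es_mapping_from_avro(schema: dict[str, Any]) -> dict[str, Any]:
    """Derive an index mapping from a flat Avro record schema."""
    properties: dict[str, Any] = {}
    for fld in schema["fields"]:
        avro_type = fld["type"]
        if isinstance(avro_type, list):  # nullable union such as ["null", "double"]
            non_null = [t for t in avro_type if t != "null"]
            if len(non_null) != 1:
                raise ValueError(f"unsupported union for field {fld['name']!r}")
            avro_type = non_null[0]
        if not isinstance(avro_type, str) or avro_type not in AVRO_TO_ES:
            raise ValueError(f"unsupported type for field {fld['name']!r}: {avro_type!r}")
        properties[fld["name"]] = {"type": AVRO_TO_ES[avro_type]}
    return {"mappings": {"properties": properties}}


schema = json.loads("""{
  "type": "record", "name": "Click",
  "fields": [
    {"name": "user", "type": "string"},
    {"name": "ts", "type": "long"},
    {"name": "price", "type": ["null", "double"]}
  ]
}""")
print(json.dumps(es_mapping_from_avro(schema), indent=2))
```

Whether strings become `keyword` or `text` fields is a design choice: `keyword` suits exact-match filtering and aggregations, while `text` suits full-text search.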

Azure also provides Azure Cosmos DB with a Cassandra API, so Apache Cassandra capabilities are available in this cloud as well. GCP covers this area with Firebase Realtime Database, Firestore, and Bigtable.

4. Streaming Data Storage

Storage is generally relatively cheap, so organizations tend to store their streaming data. A data lake is the most flexible and inexpensive option for storing event data, but setting it up properly and maintaining it is difficult. It may involve data processing, data partitioning, and backfilling with historical data, so in the end, creating an operational data lake can become a challenge in its own right.
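Partitioning is one of the set-up decisions mentioned above. A common convention is to write events under date-based, Hive-style folder paths so that queries can skip irrelevant data. The Python sketch below assumes a hypothetical `raw/<source>/year=YYYY/month=MM/day=DD/hour=HH` layout and UTC timestamps; the right layout depends on the tools that will read the lake.

```python
from collections import defaultdict
from datetime import datetime, timezone


def partition_path(source: str, epoch_seconds: float) -> str:
    """Map an event timestamp to a Hive-style, hourly partition folder (UTC, hypothetical layout)."""
    t = datetime.fromtimestamp(epoch_seconds, tz=timezone.utc)
    return f"raw/{source}/year={t:%Y}/month={t:%m}/day={t:%d}/hour={t:%H}"


def group_by_partition(source: str, events: list[dict]) -> dict[str, list[dict]]:
    """Group events into the partition folders they would be written to."""
    groups: dict[str, list[dict]] = defaultdict(list)
    for event in events:
        groups[partition_path(source, event["ts"])].append(event)
    return dict(groups)


events = [
    {"ts": 1622592000, "value": 1},  # 2021-06-02 00:00:00 UTC
    {"ts": 1622595599, "value": 2},  # 2021-06-02 00:59:59 UTC
    {"ts": 1622595600, "value": 3},  # 2021-06-02 01:00:00 UTC
]
for path, batch in group_by_partition("sensors", events).items():
    print(path, len(batch))
```

Because the path depends only on the event timestamp, the same function can place backfilled historical events into the same folders that live data would have used.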

All major cloud vendors provide components that serve as data lakes: Azure provides Azure Data Lake Storage (ADLS), and GCP has Google Cloud Storage.

Another option is to store the data in a data warehouse or in the persistent storage of selected tools, such as Kafka, Databricks/Spark, or BigQuery.

To conclude the topic of Streaming Data Architecture: the exact selection of components depends on the available stack, and the result could look like the example below, a modern streaming data architecture based on Microsoft Azure.

To sum up

A modern Streaming Data Architecture has some important benefits that should be taken into account when designing particular solutions. When properly designed, it eliminates the need for extensive data engineering, delivers high performance, can be deployed quickly, and comes with built-in high availability and fault tolerance. The architecture is also flexible enough to support multiple use cases, with a low total cost of ownership.

To achieve this, organizations may opt for full-stack solutions or develop relevant architecture blueprints to ensure fast and robust delivery of solutions tailored to business needs.
