Do you use Databricks Workspace and notebooks to analyze and process data, but find that this approach stops being effective for complex applications? You can connect to a Databricks cluster from your local IDE and run your code from there. Learn how to do it here.

Intro

Thanks to Databricks Workspace and notebooks, we can quickly analyze and transform data. This is a perfect solution as long as we use it for ad hoc tasks or simple applications. For more complex work, however, developing code in a browser notebook is tiring and inefficient. The longer the notebook gets, the harder the code is to read and the less responsive the environment becomes when you use autocomplete or add cells. Fortunately, we can write code in a local IDE and run it directly from there. In this article, we will go step by step through connecting to a Databricks cluster and running Spark code with the “databricks-connect” package (Databricks Connect).

Implementation

Preparing your development environment properly is essential for connecting to a Databricks cluster from a local IDE, and it keeps your work efficient later on.

Preparing the environment

Connecting to a Databricks cluster requires a local Python version that matches the cluster's Databricks Runtime. For example, if you connect to a cluster with Databricks Runtime 7.3 LTS, you must use Python 3.7. Note that not every Databricks Runtime is supported (the limitations are described later in this article). A full list of supported runtimes with the corresponding Python versions can be found here.

You also need Java Runtime Environment 8 (JRE 8). On Ubuntu/Debian you can install it with apt, e.g. `sudo apt install openjdk-8-jre`.
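Both prerequisites can be checked from Python before going further. This is a minimal sketch: only the 7.3 LTS to Python 3.7 pair comes from this article, so you would extend `REQUIRED_PYTHON` yourself from the official compatibility table, and `python_matches`, `java_major` and `local_java_major` are hypothetical helper names.

```python
import re
import subprocess
import sys

# Databricks Runtime -> required local Python (major, minor).
# Only 7.3 -> 3.7 comes from the article; add other runtimes from the
# official compatibility table (unknown runtimes raise KeyError).
REQUIRED_PYTHON = {"7.3": (3, 7)}


def python_matches(runtime, version_info=sys.version_info):
    """Return True if the given Python version matches the runtime's requirement."""
    return tuple(version_info[:2]) == REQUIRED_PYTHON[runtime]


def java_major(version_output):
    """Extract the Java major version from `java -version` output.

    Java 8 reports itself as "1.8.0_xxx"; later releases use "11.0.x" etc.
    """
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if match is None:
        raise ValueError("unrecognized `java -version` output")
    major = int(match.group(1))
    if major == 1 and match.group(2):
        # Legacy numbering scheme: "1.8" means Java 8.
        major = int(match.group(2))
    return major


def local_java_major():
    """Run `java -version` (it prints to stderr) and return the major version."""
    result = subprocess.run(
        ["java", "-version"], capture_output=True, text=True, check=True
    )
    return java_major(result.stderr)
```

Inside the environment meant for a 7.3 LTS cluster, `python_matches("7.3")` should return `True` and `local_java_major()` should return `8`.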

Furthermore, the databricks-connect package conflicts with PySpark. If PySpark is installed in your development environment, remove it first with `pip uninstall pyspark`.
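Checking for the conflict from code needs a little care: databricks-connect ships its own `pyspark` module, so `import pyspark` succeeds either way. The sketch below therefore looks up the standalone PyPI distribution named pyspark instead. The helper names are hypothetical, and on Python 3.7 it assumes the `importlib-metadata` backport is installed.

```python
import subprocess
import sys

try:
    from importlib import metadata  # Python 3.8+
except ImportError:  # Python 3.7 (e.g. for Runtime 7.3 LTS) needs the backport
    import importlib_metadata as metadata


def is_installed(dist_name):
    """Return True if a distribution with this PyPI name is installed."""
    try:
        metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return False
    return True


def uninstall_pyspark():
    """Remove the standalone pyspark distribution if present; return True if removed."""
    if is_installed("pyspark"):
        subprocess.check_call(
            [sys.executable, "-m", "pip", "uninstall", "-y", "pyspark"]
        )
        return True
    return False
```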

It is also strongly recommended to use a clean Python environment created with venv, conda or a similar tool. This approach lets you configure connections to multiple clusters/workspaces from one place.
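For the venv option, here is a sketch using only the standard library. Keeping one environment per cluster runtime is an assumption about how you organize things, and the directory name in the usage note is hypothetical. venv gives the new environment the Python version of the interpreter that runs it, so run this with Python 3.7 for a 7.3 LTS cluster.

```python
import venv
from pathlib import Path


def create_env(path, with_pip=True):
    """Create an isolated virtual environment at `path` and return its directory.

    The environment inherits the Python version of the interpreter running
    this function, so start it with the version your cluster runtime needs.
    """
    env_dir = Path(path).expanduser()
    venv.EnvBuilder(with_pip=with_pip).create(env_dir)
    return env_dir
```

For example, `create_env("~/envs/dbconnect-7.3")` followed by `source ~/envs/dbconnect-7.3/bin/activate` (Linux/macOS) gives you a dedicated environment for that cluster.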

Connecting to a cluster

So how do you connect to a Databricks cluster? Once the local environment is ready, install the databricks-connect package. The client version must match the cluster's Databricks Runtime version. In our case it is 7.3, so we run `pip install -U "databricks-connect==7.3.*"`.

Note that the client version has the form “X.Y.*”, where X.Y is the Databricks Runtime version (7.3) and the “.*” suffix matches the package's own patch version. Using “*” ensures that you always get the latest client release for that runtime.
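The same install can be scripted when you set up several environments. This is a sketch with hypothetical helper names; it derives the “X.Y.*” requirement from a runtime label and installs it into the interpreter that runs the script.

```python
import re
import subprocess
import sys


def connect_requirement(runtime):
    """Build the pip requirement, e.g. "7.3 LTS" -> "databricks-connect==7.3.*"."""
    match = re.match(r"\s*(\d+)\.(\d+)", runtime)
    if match is None:
        raise ValueError(f"cannot parse runtime version: {runtime!r}")
    major, minor = match.groups()
    # ".*" lets pip pick the newest client release for this runtime.
    return f"databricks-connect=={major}.{minor}.*"


def install_connect(runtime):
    """Install or upgrade the matching client in the current interpreter's environment."""
    subprocess.check_call(
        [sys.executable, "-m", "pip", "install", "-U", connect_requirement(runtime)]
    )
```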

Once the installation succeeds, it is time to configure the connection. First, gather the following:

- Workspace URL (Databricks host)

- Cluster ID

- Organization ID (Org ID) (Azure only!)

- Personal access token

- Connection port

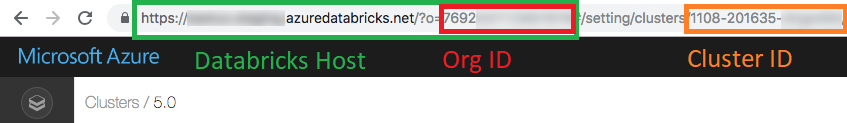

You can find the first three parameters in the URL of the cluster you want to connect to: in your browser, open “Compute” and then select that cluster.
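The three values can be pulled out of the copied URL with the standard library. This sketch assumes an Azure-style cluster URL like the made-up example below: the workspace host, an `?o=<org id>` query and a `#setting/clusters/<cluster id>/...` fragment. Other clouds or UI versions may lay the URL out differently.

```python
from urllib.parse import parse_qs, urlsplit


def parse_cluster_url(url):
    """Extract host, org ID and cluster ID from a cluster page URL (assumed layout)."""
    parts = urlsplit(url)
    host = f"{parts.scheme}://{parts.netloc}"
    # The org ID is passed as the "o" query parameter (Azure only).
    org_id = parse_qs(parts.query).get("o", [None])[0]
    # The cluster ID is the segment after "clusters" in the fragment.
    segments = parts.fragment.split("/")
    cluster_id = None
    if "clusters" in segments:
        i = segments.index("clusters")
        if i + 1 < len(segments):
            cluster_id = segments[i + 1]
    return {"host": host, "org_id": org_id, "cluster_id": cluster_id}


# Hypothetical URL copied from the browser.
example = (
    "https://adb-1234567890123456.7.azuredatabricks.net/"
    "?o=1234567890123456#setting/clusters/0123-456789-abcd1234/configuration"
)
print(parse_cluster_url(example))
```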

You can generate a personal access token in “User Settings”; you can find instructions here. The default connection port is 15001. If it has been changed, you should find it in the cluster's “Advanced Options” under the “Spark” tab (config key “spark.databricks.service.port”).

Once all the required parameters are ready, configure the Databricks Connect client by running `databricks-connect configure`.
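`databricks-connect configure` asks for exactly the values listed above. If you would rather keep them in code or CI, here is a sketch of a small config object. The 15001 port default comes from the text. The `DATABRICKS_*` environment variable names are an assumption based on the Databricks Connect documentation for these client versions, so verify them (and how they rank against the values saved by `databricks-connect configure`) for your release. Read the token from a secret store or the environment rather than hard-coding it.

```python
import os
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class ConnectConfig:
    host: str                     # workspace URL
    token: str                    # personal access token
    cluster_id: str
    org_id: Optional[str] = None  # Azure only
    port: int = 15001             # default Databricks Connect port

    def to_env(self) -> Dict[str, str]:
        """Map the settings to environment variables (names assumed, see above)."""
        env = {
            "DATABRICKS_ADDRESS": self.host,
            "DATABRICKS_API_TOKEN": self.token,
            "DATABRICKS_CLUSTER_ID": self.cluster_id,
            "DATABRICKS_PORT": str(self.port),
        }
        if self.org_id:
            env["DATABRICKS_ORG_ID"] = self.org_id
        return env


config = ConnectConfig(
    host="https://adb-1234567890123456.7.azuredatabricks.net",  # hypothetical
    token=os.environ.get("MY_DATABRICKS_TOKEN", ""),  # hypothetical variable name
    cluster_id="0123-456789-abcd1234",  # hypothetical
    org_id="1234567890123456",  # hypothetical
)
```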

Enter the parameters when prompted. Then check the connection with `databricks-connect test`.

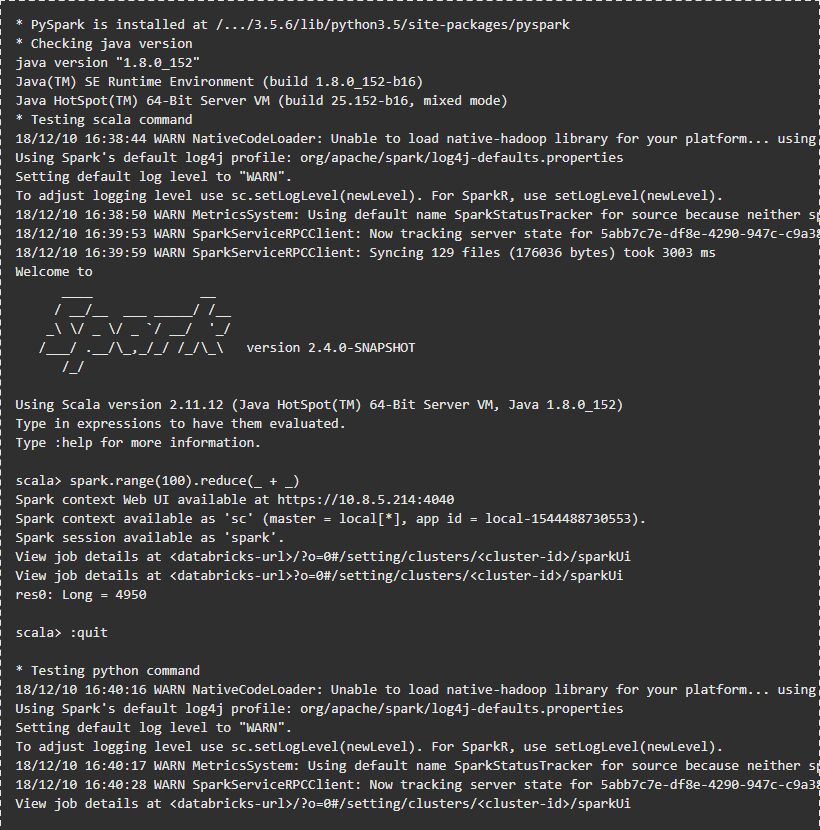

The connection test runs a series of checks against the cluster and should finish without errors.

Running example code

Integration with VSC IDE

Before running code on a Databricks cluster, you need to integrate databricks-connect with your IDE. In my case, it is Visual Studio Code (VSC). Integrating the connector with VSC is easy: all you need to do is point the IDE to the Python environment where databricks-connect is installed. When you then run PySpark code, the connector executes it on the Databricks cluster.

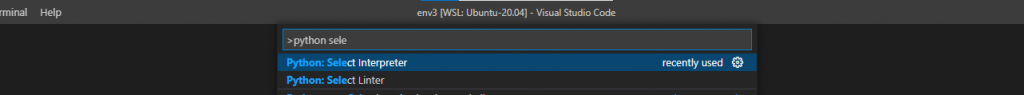

To change the Python interpreter in VSC, press F1 and start typing “Python: Select Interpreter”.

Next, choose the Python environment from the list or enter the path to its Python executable.
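If the environment does not appear in the list, you can print the path to paste into VSC by running this sketch with the activated environment's Python. The helper name is hypothetical. The `in_venv` check only detects venv/virtualenv environments: a conda environment is its own base installation, so it reports `False` there.

```python
import sys


def interpreter_info():
    """Describe the Python interpreter that is running this code."""
    return {
        "executable": sys.executable,  # path to paste into VSC
        "version": "{}.{}".format(*sys.version_info[:2]),
        "in_venv": sys.prefix != sys.base_prefix,  # venv/virtualenv only
    }


print(interpreter_info())
```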

Now you are ready to run code from the IDE on the Databricks cluster. Databricks Connect sends Spark operations (PySpark DataFrame and SQL calls) to the cluster, while code that does not use PySpark runs locally.

Note that you have to create the Spark session yourself, for example with `SparkSession.builder.getOrCreate()`. When you run code directly in a Databricks notebook, the `spark` session is already initialized for you. You can find this code in this repository.
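The repository's code is not reproduced here. As an illustration, here is a minimal sketch of a script that mixes plain Python with PySpark; the data, column names and function names are made up. The Spark session is created explicitly. The `pyspark` import sits inside the function only so that the rest of the module also loads on a machine without Spark.

```python
# Plain Python data: building it always happens locally.
SAMPLE_ROWS = [("alice", 34), ("bob", 45), ("carol", 29)]


def local_average_age(rows):
    """Pure Python: executed on your machine, never on the cluster."""
    return sum(age for _, age in rows) / len(rows)


def count_older_than(min_age):
    """Spark code: with databricks-connect configured, the job runs on the cluster."""
    from pyspark.sql import SparkSession

    # Outside a notebook there is no predefined `spark`, so create (or reuse) one.
    spark = SparkSession.builder.getOrCreate()
    df = spark.createDataFrame(SAMPLE_ROWS, ["name", "age"])
    older = df.filter(df.age > min_age)
    older.show()
    return older.count()
```

Calling `count_older_than(30)` from VSC should display the two matching rows and return 2. `local_average_age(SAMPLE_ROWS)` never touches the cluster.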

Not only VSC!

You can integrate plenty of other IDEs, e.g. PyCharm, Eclipse or Jupyter Notebook. Configuration instructions for other IDEs can be found here.

Access to DBUtils

DBUtils (dbutils) is a very helpful module available in notebooks. With it, you can read secrets or run file system commands on DBFS. You can also use “dbutils” from your IDE in much the same way as in Databricks notebooks. You only need to install one more library and create the dbutils object yourself. First, install the “six” package in your environment with `pip install six`.

Then create the dbutils object with `DBUtils(spark)`, imported from `pyspark.dbutils`. After that, you can use the fs and secrets modules.
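Here is a sketch of creating the object so the same code works from the IDE and in a notebook, following the pattern shown in the Databricks Connect documentation. `pyspark.dbutils.DBUtils` is provided by the databricks-connect client and by Databricks Runtime, not by plain PySpark. The IPython fallback assumes you are inside a Databricks notebook. The helper names are hypothetical.

```python
def get_dbutils(spark):
    """Return a dbutils object under databricks-connect or inside a notebook."""
    try:
        # Shipped with the databricks-connect client, not with plain PySpark.
        from pyspark.dbutils import DBUtils
        return DBUtils(spark)
    except ImportError:
        # In a Databricks notebook, dbutils already exists in the IPython namespace.
        import IPython
        return IPython.get_ipython().user_ns["dbutils"]


def show_fs_and_secrets(spark):
    """List the DBFS root and the available secret scopes."""
    dbutils = get_dbutils(spark)
    for file_info in dbutils.fs.ls("dbfs:/"):
        print(file_info.path)
    for scope in dbutils.secrets.listScopes():
        print(scope.name)
```

Reading secret values with `dbutils.secrets.get(scope=..., key=...)` may be restricted when going through Databricks Connect, so check the documentation for your client version before relying on it.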

You can find this example in the repository.

Access your code from the DB Workspace

You can download and upload notebooks to and from the Databricks Workspace with the Databricks CLI. To install it, run `pip install databricks-cli` in your terminal.

To configure the Databricks CLI, you need the workspace URL and a personal access token, obtained as described above. Run `databricks configure --token` and enter both values when prompted.
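`databricks configure --token` stores these two values in the `~/.databrickscfg` file. If you need to create that file non-interactively (e.g. on a build agent), this sketch writes the same `[DEFAULT]` profile with configparser. The function name is hypothetical, and the sketch overwrites any existing profiles in the file. Running the interactive command remains the simpler option.

```python
import configparser
import os
from pathlib import Path


def write_databricks_cfg(host, token, path="~/.databrickscfg"):
    """Write a DEFAULT profile with host and token (overwrites the whole file)."""
    cfg_path = Path(path).expanduser()
    config = configparser.ConfigParser()
    config["DEFAULT"] = {"host": host, "token": token}
    with open(cfg_path, "w") as f:
        config.write(f)
    # The file contains a secret, so make it readable only by the current user.
    os.chmod(cfg_path, 0o600)
    return cfg_path
```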

Then use the `databricks workspace export` and `databricks workspace import` commands to download and upload notebooks.
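To script round trips between the IDE and the workspace, you can build the commands in Python. This sketch assumes the legacy databricks-cli options `--overwrite` and `--language` (SCALA, PYTHON, SQL or R), with the default SOURCE format. Check `databricks workspace import --help` for your CLI version. The workspace paths in the usage note are hypothetical.

```python
import subprocess


def export_cmd(workspace_path, local_path, overwrite=True):
    """Command that downloads a notebook as a source file."""
    cmd = ["databricks", "workspace", "export"]
    if overwrite:
        cmd.append("--overwrite")
    return cmd + [workspace_path, local_path]


def import_cmd(local_path, workspace_path, language="PYTHON", overwrite=True):
    """Command that uploads a local source file as a notebook."""
    cmd = ["databricks", "workspace", "import", "--language", language]
    if overwrite:
        cmd.append("--overwrite")
    return cmd + [local_path, workspace_path]


def run(cmd):
    """Execute a CLI command, raising CalledProcessError on failure."""
    subprocess.run(cmd, check=True)
```

For example, `run(export_cmd("/Users/me@example.com/etl", "etl.py"))` fetches a notebook for editing in VSC, and `run(import_cmd("etl.py", "/Users/me@example.com/etl"))` pushes it back.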

The Databricks CLI can also create clusters, manage DBFS and more. You can find a full list of commands here.

Not only Py!

You can also configure your environment and IDE to run R and Scala code! You can find more information in the following links:

Limitations

Unfortunately, Databricks Connect has some limitations. First, only a few Databricks Runtime versions are supported; at the time of writing, these were 9.1 LTS, 7.3 LTS, 6.4 and 5.5 LTS. Second, only the fs and secrets modules of dbutils are available; the library, notebook and widgets modules are not supported. Moreover, you cannot connect to clusters with table access control enabled. These are the most significant constraints. You can find the full list in the following links:

Summary

As you can see, setting up the “databricks-connect” package is simple, and it greatly speeds up development in your favorite IDE. All the code you need can be found in a dedicated GitHub repository. Check out our blog for more in-depth articles on Databricks:

- Capture Databricks’ cells truncated outputs

- Introduction to koalas and databricks

- Databricks testing with GitHub Actions